About Me

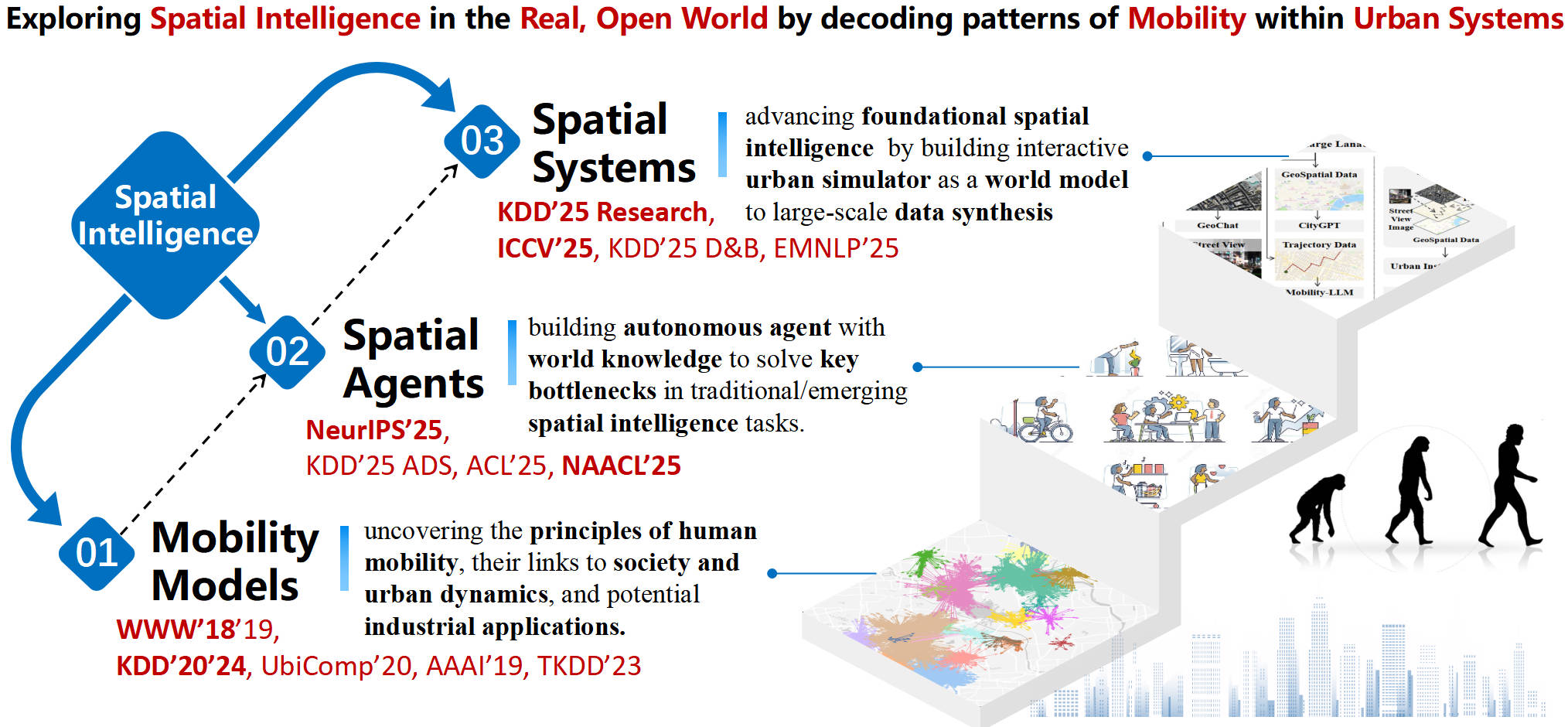

Jie Feng is currently a postdoctoral fellow in the Department of Electronic Engineering at Tsinghua University. His research mainly focuses on spatial intelligence, multi-modal large language models, human behavior modeling, urban science and spatiotemporal data mining, with over 40 papers published in top-tier venues including KDD, ICCV, ACL, WWW, EMNLP, NAACL, AAAI, UbiComp, TKDE, etc. His work has garnered more than 4000 citations on Google Scholar. From 2021 to 2023, he worked at Meituan as a researcher specializing in intelligent decision-making and large language models. He received his B.S. and Ph.D. degrees (advised by Prof. Yong Li) in electronic engineering from Tsinghua University in 2016 and 2021, respectively. His research is supported by the Shuimu Tsinghua Scholar Program.

I am actively seeking a faculty position. Should you find my research background and interests aligned with your institutional goals, I would welcome the opportunity to discuss potential openings. Please feel free to contact me at your convenience.

Research Interests

- Spatial Intelligence with Multi-modal Large Language Models in Open World, e.g., CityGPT-KDD2025, UrbanLLaVA-ICCV2025, CityBench-KDD2025, Hallucination-EMNLP2025.

- Building Intelligent Agents with Large Language Models for Real-world Applications, e.g., TrajAgent-NeurIPS2025, AgentMove-NAACL2025, PIGEON-ACL2025, AutoQual-EMNLP2025.

- Spatiotemporal Data Mining: trajectory mining, traffic forecasting, e.g., DeepMove-WWW2018, MoveSim-KDD2020, DeepSTN+-AAAI2019, UniST-KDD2024, SCDN-KDD2024.

- Urban Science: human dynamics, urban dynamics, in-depth and data-driven analyses of urban issues.

News

- New![2025.09] We release a new survey about embodied world models! Please check out the paper list on GitHub!

- New![2025.09] Two papers, AutoQual and DORIS, have been accepted by the EMNLP 2025 Industry Track. Congratulations to Xiaochong Lan!

- New![2025.09] Our paper TrajAgent has been accepted by NeurIPS 2025. Congratulations to Yuwei Du!

- New![2025.08] Our paper on geospatial knowledge hallucination has been accepted by EMNLP 2025 Findings. Congratulations to Shengyuan Wang!

- New![2025.08] Two papers about mobility modelling have been accepted by SIGSPATIAL 2025, including MoveGCL and UniMove. Congratulations to Yuan Yuan and Chonghua Han!

- New![2025.06] Our survey on world models has been published in ACM Computing Surveys. Check out our regularly updated paper list at World-Model on GitHub. Open to discussions and collaborations!

- New![2025.06] Our paper UrbanLLaVA has been accepted by ICCV 2025! Feel free to check out our Project Website for more information!

- New![2025.06] I am honored to have been selected as an Excellent Reviewer for KDD 2025.

- New![2025.05] Three papers have been accepted by KDD 2025, including CityGPT, CityBench and LocalGPT!

- New![2025.05] Two papers have been accepted by ACL 2025, including Benchmarking Basic Spatial Abilities and PIGEON!

- New![2025.04] I am glad to have been selected as a 2025 Spatial Data Intelligence Rising Star! See the news.

- New![2025.01] AgentMove has been accepted as a main conference paper at NAACL 2025, and the code has been released. Feel free to try it out!

- [2024.12] The datasets and implementation code for the CityGPT and CityBench projects are now open-source. Suggestions and collaboration are welcome!

- [2024.10] We released a preprint on an urban simulation platform with massive LLM-based agents, opencity.

- [2024.10] I am glad to have been selected as one of the Stanford/Elsevier Top 2% Scientists 2024.

- [2024.05] Two papers have been accepted by KDD 2024: High Efficiency Delivery Network and UniST.

- [2023.12] We released a preprint on Urban Generative Intelligence (UGI) in the era of large language models. For more details, please refer to arXiv.

- [2023.04] Meituan’s Real-Time Intelligent Dispatching Algorithms won the INFORMS 2023 Edelman Finalist Award. I am glad to have contributed to the “Divide-and-Conquer” framework; the algorithm details are introduced in the High Efficiency Delivery Network paper.

Selected Publications

* Equal contribution; # Correspondence; † Work done as Intern in the Lab

Spatial Intelligence with Large Language Models

- UrbanLLaVA: A Multi-modal Large Language Model for Urban Intelligence

Jie Feng, Shengyuan Wang, Tianhui Liu, Yanxin Xi, Yong Li

ICCV 2025 (CCF A) Codes | PDF | Project Website

- CityGPT: Empowering Urban Spatial Cognition of Large Language Models

Jie Feng*, Tianhui Liu*, Yuwei Du, Siqi Guo, Yuming Lin, Yong Li

KDD 2025 Research (CCF A) Codes | PDF | Project Website

- CityBench: Evaluating the Capabilities of Large Language Models for Urban Tasks

Jie Feng*, Jun Zhang*, Tianhui Liu*, Xin Zhang, Tianjian Ouyang, Junbo Yan, Yuwei Du, Siqi Guo, Yong Li

KDD 2025 D&B (CCF A) Codes | PDF

- Mitigating Geospatial Knowledge Hallucination in Large Language Models: Benchmarking and Dynamic Factuality Aligning

Shengyuan Wang, Jie Feng#, Tianhui Liu, Dan Pei, Yong Li

EMNLP 2025 Findings (CCF B) Codes | PDF

Human Behavior Analytics and Agentic Modeling

- TrajAgent: An LLM-Agent Framework for Trajectory Modeling via Large-and-Small Model Collaboration

Yuwei Du*, Jie Feng*#, Jie Zhao, Yong Li#

NeurIPS 2025 (CCF A) Codes | PDF | Project Website

- AgentMove: A Large Language Model based Agentic Framework for Zero-shot Next Location Prediction

Jie Feng, Yuwei Du, Jie Zhao, Yong Li

NAACL 2025 Main (CCF B) Codes | PDF

- Harvesting Efficient On-Demand Order Pooling from Skilled Couriers: Enhancing Graph Representation Learning for Refining Real-time Many-to-One Assignments

Yile Liang, Jiuxia Zhao, Donghui Li, Jie Feng#, Chen Zhang, Xuetao Ding, Jinghua Hao, Renqing He

KDD 2024 ADS (CCF A) PDF | Link

- DeepMove: Predicting Human Mobility with Attentional Recurrent Networks

Jie Feng, Yong Li, Chao Zhang, Funing Sun, Fanchao Meng, Ang Guo, Depeng Jin

WWW 2018 (CCF A) Codes(star 151) | PDF | Link | #5 Most Influential Paper in WWW 2018

Spatiotemporal Data Mining and Time Series Modeling

- UniST: A Prompt-Empowered Universal Model for Urban Spatio-Temporal Prediction

Yuan Yuan, Jingtao Ding, Jie Feng, Depeng Jin, Yong Li

KDD 2024 Research (CCF A) Codes(star 180) | PDF | Link | #3 Most Influential Paper at KDD 2024

- Dynamic graph convolutional recurrent network for traffic prediction: benchmark and solution

Fuxian Li, Jie Feng, Huan Yan, Guangyin Jin, Fan Yang, Funing Sun, Depeng Jin, Yong Li

TKDD 2023 (CCF B) Codes(star 296) | PDF | Link | #1 Most Cited Paper in TKDD 2023

- DeepSTN+: Context-aware Spatial-Temporal Neural Network for Crowd Flow Prediction in Metropolis

Ziqian Lin*, Jie Feng*, Ziyang Lu, Yong Li, Depeng Jin

AAAI 2019 (CCF A) Codes(star 66) | PDF | Link

Survey

- “A Survey of Embodied World Models”, Yu Shang, Yinzhou Tang, Xin Zhang, et al. preprint 2025.09.

- “AI Agent Behavioral Science.”, Lin Chen, Yunke Zhang, Jie Feng, et al. preprint 2025.06.

- “A Survey of Large Language Model-Powered Spatial Intelligence Across Scales: Advances in Embodied Agents, Smart Cities, and Earth Science.”, Jie Feng, Jinwei Zeng, et al. preprint 2025.04. Chinese article by Zhuanzhi.

- “Urban Generative Intelligence (UGI): A foundational platform for agents in embodied city environment.”, Fengli Xu, Jun Zhang, Chen Gao, Jie Feng, Yong Li. preprint 2023.12

- “Towards Large Reasoning Models: A Survey of Reinforced Reasoning with Large Language Models.”, Fengli Xu, Qianyue Hao, et al. Cell Patterns 2025

- “Understanding World or Predicting Future? A Comprehensive Survey of World Models.”, Jingtao Ding, Yunke Zhang, et al. ACM Computing Surveys 2025

- “Dynamic graph convolutional recurrent network for traffic prediction: Benchmark and solution.”, Fuxian Li, Jie Feng, et al. ACM TKDD 2023

Talks

- “Utilizing LLM-based Agents for Human Mobility Modelling”, Invited Talk, PCC Young Scholars Forum @ HMCC&HHME2025, Dalian

- “LLM-Powered Spatial Intelligence Across Scales: Advances in Embodied Agents, Smart Cities, and Earth Science”, Tutorial, ACM SIGSPATIAL CHINA Spatial Data Intelligence 2025 Conference, Xiamen

- “LLM-Based Agentic Framework: A Novel Paradigm for Modeling Human Mobility”, Invited Talk, ACM SIGSPATIAL CHINA Spatial Data Intelligence 2025 Conference, Xiamen

- “CityGPT: From LLM to Urban Generative Intelligence”, Invited Talk, CCF ChinaData 2024 Conference, Boao